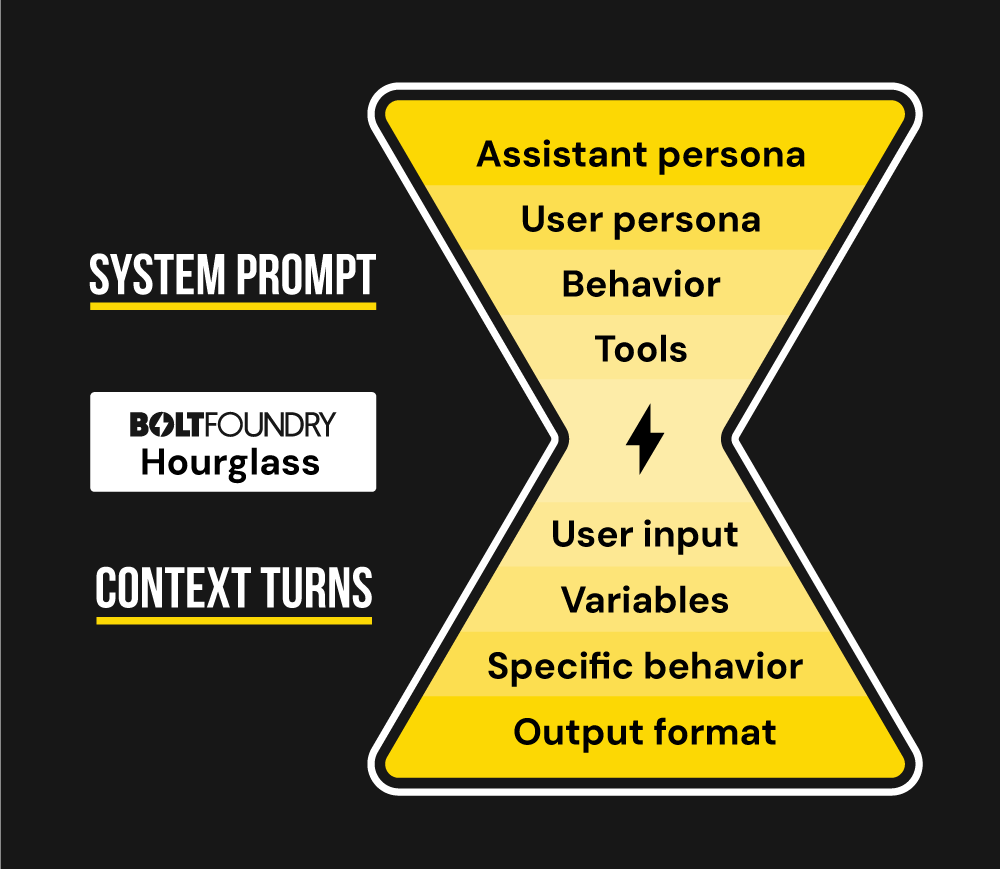

Context engineering 101: The hourglass

We hesitate to say "use this one simple trick to improve your prompts by 100%," but honestly, it might be true.

It turns out that if you talk to LLMs like you talk to people, they actually perform better. I studied communication in college and learned there are a few techniques you can use to help people understand a topic better.

The simplest one? The inverted pyramid. In a nutshell, you start with the most crucial information at the top and work your way down to background info, so readers get the most important information as quickly as possible. If they need to know more, they keep reading.

Inverted pyramid system prompts

At Bolt Foundry, we’re building out a system for this, but the concepts are pretty universal. Essentially, you want to describe the “Who / Why” to the assistant first, then the “What,” and lastly the “How.”
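To make the ordering concrete, here is a minimal Python sketch of a static system prompt assembled Who/Why first, then What, then How. The Acme support-assistant wording and the constant names are hypothetical placeholders, not Bolt Foundry’s actual format.

```python
# Hypothetical sections of an "inverted pyramid" system prompt.
# Most important first: who the assistant is and why it exists.
WHO_WHY = (
    "You are a support assistant for Acme, a small accounting-software company. "
    "Customers come to you when they are stuck, and your job is to get them unstuck."
)
# Then what it does.
WHAT = "You answer questions about invoices, exports, and account settings."
# Lastly how it does it, written like a dossier rather than a list of rules.
HOW = (
    "You keep answers short and plain, and you ask a clarifying question "
    "when a request could mean more than one thing."
)


def build_system_prompt() -> str:
    """Join the sections in inverted-pyramid order: Who/Why, What, How.

    Deliberately takes no arguments: the system prompt is fixed at
    development time and contains no per-request variables.
    """
    return "\n\n".join([WHO_WHY, WHAT, HOW])


SYSTEM_PROMPT = build_system_prompt()

if __name__ == "__main__":
    print(SYSTEM_PROMPT)
```

Note that `build_system_prompt` takes no parameters; every request gets the exact same text.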

We’ll post more about this, but guiding an LLM into its role, rather than handing it a precise list of do’s and don’ts, lets it perform better. The Waluigi effect (where pushing a model hard toward a trait makes it easier to elicit the opposite trait) is less likely to happen if you “soften” your constraints, making the prompt less like a recipe and more like a dossier.

NEVER INCLUDE VARIABLES IN SYSTEM PROMPTS

It’s in caps for a specific reason: system prompts are the most valuable tool in guiding an LLM. They’re the first information the assistant sees, and LLM developers are building a chain of command into everything they do, so system-level instructions take priority over what users send. System prompts should contain developer-specific instructions that cannot be overridden.

DO NOT INCLUDE INFORMATION IN YOUR SYSTEM PROMPT WHICH CAN OVERRIDE YOUR INTENTION.

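Additionally, there’s prompt caching. Providers can reuse the work already done on a prompt prefix they have seen before, so inference effectively starts in the middle (after the cached part) instead of at the beginning. That only works when the prefix is identical, and the system prompt comes first, so if you change your system prompt all the time, you lose out on this opportunity.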
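A rough way to see the caching cost is to compare how much of two requests match from the very first character. This sketch assumes a provider that caches on an exact prompt prefix; real caches work on tokens and have their own provider-specific rules, so the character count here is only a stand-in. The prompts, names, and helper functions are hypothetical.

```python
import json

STATIC_SYSTEM = "You are a careful assistant for Acme's support team."


def shared_prefix_chars(a: list[dict], b: list[dict]) -> int:
    """Count leading characters two serialized prompts have in common.

    A crude stand-in for an exact-prefix cache (real providers match tokens).
    """
    sa, sb = json.dumps(a), json.dumps(b)
    n = 0
    for ca, cb in zip(sa, sb):
        if ca != cb:
            break
        n += 1
    return n


def static_prompt(user_name: str, question: str) -> list[dict]:
    # Variable data lives in the user turn; the system prompt never changes.
    return [
        {"role": "system", "content": STATIC_SYSTEM},
        {"role": "user", "content": f"My name is {user_name}. {question}"},
    ]


def dynamic_prompt(user_name: str, question: str) -> list[dict]:
    # Anti-pattern: a variable interpolated into the system prompt.
    return [
        {"role": "system", "content": f"You are helping {user_name}. " + STATIC_SYSTEM},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    q = "How do I export my invoices?"
    print("static: ", shared_prefix_chars(static_prompt("Ada", q), static_prompt("Grace", q)))
    print("dynamic:", shared_prefix_chars(dynamic_prompt("Ada", q), dynamic_prompt("Grace", q)))
```

In the static version the whole system message falls inside the shared prefix; putting the user’s name in the system prompt makes two requests diverge a few words in.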

(There’s a bunch more I’ll explain later, but trust me: DO NOT MAKE SYSTEM PROMPTS DYNAMIC.)

Add context to user turns

Okay, so if you’re not allowed to put variables in the system prompt (SERIOUSLY, DON’T), then how do you include them?

User turns, of course.

But then what if you don’t want to make a bunch of calls over and over just to get the assistant up to speed?

Then don’t do that. Just make synthetic user turns.

Context is the bottom of the hourglass

Did you know you can just send an OpenAI-compatible chat completions endpoint an array of messages? Like, the assistant messages don’t have to have been generated by the LLM… you can just pretend the LLM said them, and it will carry on the conversation from there.
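Here is a minimal sketch of such a request body in Python. The assistant turn is written by us, not generated by the model; the travel-planning content and the `your-model-name` placeholder are hypothetical.

```python
import json

# Every turn below is authored by us. The "assistant" turn is synthetic:
# the model never said it, but it will continue as if it had.
messages = [
    {"role": "system", "content": "You are a concise travel-planning assistant."},
    {"role": "user", "content": "I'm planning a weekend trip."},
    {"role": "assistant", "content": "Great. Which city are you visiting?"},  # synthetic
    {"role": "user", "content": "Lisbon, in early May."},
]

# JSON body for an OpenAI-compatible POST /v1/chat/completions request.
# The model name is a placeholder for whatever your provider serves.
payload = {"model": "your-model-name", "messages": messages}
body = json.dumps(payload)

if __name__ == "__main__":
    print(body)
```

With the official `openai` Python package, the same list can be passed as `client.chat.completions.create(model=..., messages=messages)`; OpenAI-compatible servers accept the same message shape.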

So just create synthetic user turns and put all of your context in them. Importantly, order the user turns from least important to most important, so the most important material sits at the bottom, right before the model responds. My recommendation is “user info” (i.e., user-supplied content) first, then any context you want to provide, and finally the output format you want.

You need to provide assistant turns just as you would if the conversation were real, but make each assistant turn ask for the precise information the next user turn supplies.
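Putting those two paragraphs together, a helper might build the whole conversation like the sketch below. It rests on assumptions: the editor persona, the assistant’s questions, and the opening user turn (added so roles alternate user/assistant) are all hypothetical, and the three arguments map to the user info, context, and output format recommended above.

```python
# Static system prompt: no variables, so it is identical on every request.
SYSTEM_PROMPT = "You are an editor who helps writers tighten their drafts."


def build_messages(user_content: str, extra_context: str, output_format: str) -> list[dict]:
    """Build synthetic turns ordered from least to most important.

    Order: user-supplied content, then extra context, then output format,
    so the output format is the last thing the model reads before answering.
    """
    # Each pair is (synthetic assistant question, user turn that answers it).
    turns = [
        ("Please paste the draft you'd like me to work on.", user_content),
        ("Is there any background I should know about?", extra_context),
        ("How would you like the result formatted?", output_format),
    ]
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        # Opening user turn so the conversation starts with the user.
        {"role": "user", "content": "Hi, I need help with a draft."},
    ]
    for ask, supply in turns:
        messages.append({"role": "assistant", "content": ask})  # synthetic question
        messages.append({"role": "user", "content": supply})  # per-request data
    return messages


if __name__ == "__main__":
    for m in build_messages("My draft...", "It's for a newsletter.", "Return a bulleted list."):
        print(f"{m['role']:>9}: {m['content']}")
```

Only the arguments change between requests; the system prompt and the assistant’s questions stay fixed.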

OK, but seriously, how do I know this works?

I’ll have data for you soon. In the meantime, aibff (the Bolt Foundry tool for evals, decks, cards, contexts, and samples) will let you build all of this using just markdown.

It’s really early days for us, so the best way to find out what’s going on is this newsletter, or boltfoundry.com if you care. Also, feel free to reach out to me at x.com/randallb.